Why High-Performance Voice Agents Require Owning the Voice Stack

The explosion of voice AI has made it increasingly difficult to separate meaningful innovation from surface-level demos. Many vendors can show a voice agent that talks. Few can deliver one that feels fast, natural and reliable in a real contact-center environment.

One of the clearest differentiators of high-performing voice agents is whether the vendor truly owns the voice stack - or simply stitches together third-party components.

Performance, latency, and reliability are determined not by any one component, but by how the entire pipeline - from audio in to audio out - is designed, observed, and controlled. When that pipeline is fragmented across third-party APIs, technical issues become impossible to diagnose or fix.

This is why owning the stack is not an implementation detail. It is the prerequisite for building high-performance voice agents.

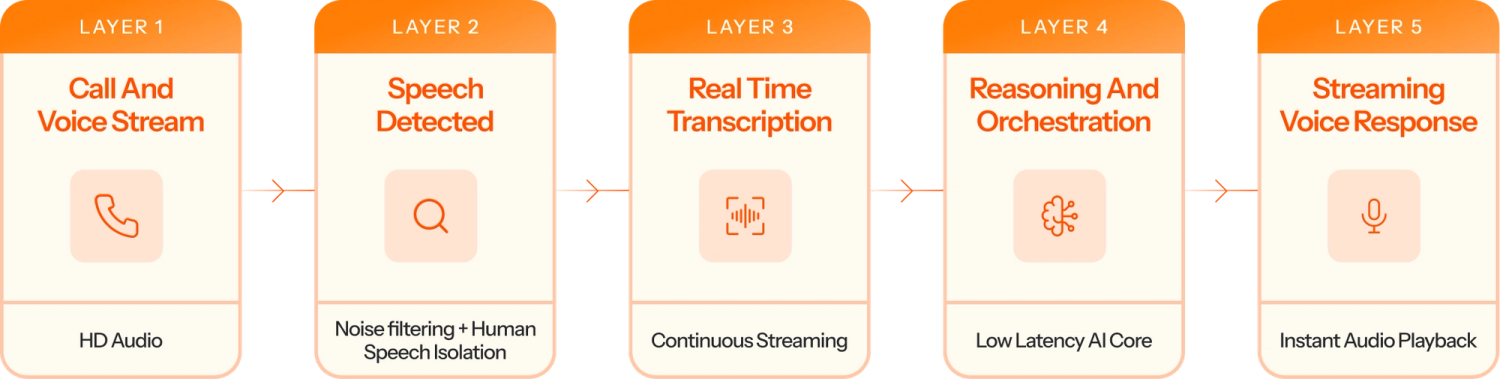

What a Modern Voice Stack Looks Like

A production-grade voice agent is a multi-layered pipeline that takes in user input, processes it intelligently and returns a coherent response, all in real time. At Level AI, we manage this pipeline across five tightly integrated layers.

1. Connectivity and Protocol Layer (SIP Stack)

The entry point of the system is the telephony layer, where calls are established and audio is streamed over IP. This layer handles Session Initiation Protocol (SIP) signaling and real-time media transport. Level AI operates its own SIP stack, which allows direct control over the Voice-over-IP stream before the audio reaches the AI system. This control is critical not only for managing packet loss, jitter, and routing decisions, but also for enabling seamless call transfers to human agents over PSTN and VoIP networks.
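Jitter, one of the conditions this layer has to manage, is conventionally quantified with the interarrival jitter estimator defined in RFC 3550 (the RTP specification). The following is a minimal sketch of that estimator. It is not Level AI's implementation, the function name is illustrative, and it assumes send and arrival timestamps are already in the same units (e.g. milliseconds).

```python
def interarrival_jitter(send_times, arrival_times):
    """Running interarrival jitter as defined in RFC 3550, section 6.4.1.

    send_times: sender timestamps of consecutive packets.
    arrival_times: local arrival times of the same packets, in the same units.
    Returns the smoothed jitter estimate after the last packet.
    """
    jitter = 0.0
    for i in range(1, len(send_times)):
        # D(i-1, i): change in relative transit time between consecutive packets
        transit_prev = arrival_times[i - 1] - send_times[i - 1]
        transit_curr = arrival_times[i] - send_times[i]
        d = transit_curr - transit_prev
        # Exponential smoothing with gain 1/16, as the RFC specifies
        jitter += (abs(d) - jitter) / 16.0
    return jitter
```

Packets sent every 20 ms and delivered with a constant delay yield a jitter of 0; any variation in transit time raises the estimate, which is the signal a jitter buffer sizes itself against.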

2. Input Pre-Processing and Voice Activity Detection (VAD)

Before transcription begins, the system must determine whether incoming audio contains human speech or just background noise. This is handled by Voice Activity Detection (VAD). Poor VAD leads to background noise being treated as speech, or to users being unable to interrupt the voice agent. Level AI owns this layer, enabling millisecond-level detection of speech boundaries.
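Production VAD is usually a trained model, but the core task, labeling short audio frames as speech or non-speech, can be illustrated with a simple energy threshold. This is a minimal sketch assuming 16-bit PCM samples already split into frames. The threshold is a hypothetical placeholder, and this is not how Level AI's VAD works.

```python
import math

def frame_rms_dbfs(frame):
    """Root-mean-square level of one frame of 16-bit PCM samples, in dBFS."""
    if not frame:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in frame) / len(frame))
    if rms == 0:
        return float("-inf")
    return 20 * math.log10(rms / 32768.0)

def classify_frames(frames, threshold_dbfs=-40.0):
    """Label each frame True (speech) or False (non-speech) using energy alone.

    threshold_dbfs is a hypothetical tuning value; real systems adapt it to the
    noise floor or replace this test with a trained classifier.
    """
    return [frame_rms_dbfs(f) > threshold_dbfs for f in frames]
```

A pure energy test illustrates exactly the failure described above: a loud fan or a TV in the background clears the threshold and is mistaken for speech, which is why the detector itself needs to be observable and tunable.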

3. Real-Time Transcription (Speech-to-Text)

Our Speech-to-Text (STT) layer transcribes the audio stream into text. In production voice systems, transcription quality is heavily influenced by accents, speaking styles, and industry-specific vocabulary, factors that generic, one-size-fits-all models often struggle with. Transcription errors compound quickly in voice systems, because every downstream step, including the LLM's response, works from the transcript. At Level AI, we take a model-agnostic approach that lets us use both our proprietary ASR model and multiple other best-in-class speech models. This allows us to adapt transcription behavior to customer-specific language patterns and domains, and to continuously optimize for accuracy.
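Optimizing for accuracy requires measuring it, and the standard metric for transcription is word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the model's output, divided by the number of reference words. A minimal sketch follows. It performs no text normalization (casing, punctuation, number formatting), which real evaluations usually need.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (S + D + I) / N, computed as a Levenshtein distance over words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j]: edit distance between the reference words processed so far
    # and the first j hypothesis words (rolling single-row DP table)
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)
```

For example, `word_error_rate("please reset my account password", "please rest my password")` returns `0.4`: one substitution ("reset" to "rest") and one deletion ("account") over five reference words. Tracking WER per customer domain is what makes comparing ASR models on that customer's vocabulary possible.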

4. Cognitive Logic and Orchestration (LLM Layer)

The orchestration layer manages conversation state, applies guardrails, and routes input into the appropriate LLM logic. This layer determines when the system should respond, how it should respond, and what context it should carry forward.
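To make these responsibilities concrete, here is a deliberately small sketch of a single turn: carry state forward, apply a guardrail before the LLM is called, and route known intents to deterministic handlers. Everything in it (the `ConversationState` fields, the blocked-topic list, the keyword routing, and the `call_llm` stub) is hypothetical and stands in for much richer logic. It is not Level AI's orchestration code.

```python
from dataclasses import dataclass, field

@dataclass
class ConversationState:
    history: list = field(default_factory=list)  # (speaker, text) pairs carried forward
    escalate: bool = False                       # set when a human should take over

# Hypothetical guardrail: topics the agent must not answer on its own.
BLOCKED_TOPICS = ("legal advice", "medical advice")

def call_llm(prompt: str, history: list) -> str:
    """Stub standing in for a real LLM call that would receive the history as context."""
    return f"LLM reply to: {prompt}"

def handle_turn(state: ConversationState, user_text: str) -> str:
    state.history.append(("user", user_text))
    lowered = user_text.lower()
    if any(topic in lowered for topic in BLOCKED_TOPICS):
        # 1. Guardrail: refuse and escalate instead of letting the LLM answer.
        state.escalate = True
        reply = "I can't help with that, but I can transfer you to a specialist."
    elif "representative" in lowered or "human" in lowered:
        # 2. Routing: a deterministic handler for a known intent (keyword match for illustration).
        state.escalate = True
        reply = "Transferring you to a human agent now."
    else:
        # 3. Default: hand the turn to the LLM with the accumulated context.
        reply = call_llm(user_text, state.history)
    state.history.append(("agent", reply))
    return reply
```

The ordering is the design point: guardrails and deterministic routes run before the LLM, so business rules hold regardless of which model sits behind `call_llm`.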

5. Text-to-Speech (TTS Layer)

Finally, the system converts generated text back into audio. To avoid robotic pauses, Level AI optimizes TTS for both human-like voice quality and low latency. Rather than waiting for the full response to be generated, the system synthesizes audio and streams it back frame-by-frame as soon as the first segments are ready. In addition, our TTS setup supports multiple languages and accents and can be personalized to customer needs.
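Streaming synthesis can be sketched as a generator pipeline: buffer the LLM's token stream into speakable segments (here, split at sentence-ending punctuation) and synthesize each segment as soon as it is complete, rather than after the whole response exists. `synthesize` is a hypothetical stand-in for a TTS engine call, and sentence-level segmentation is a simplifying assumption; real systems often use smaller chunks.

```python
from typing import Iterable, Iterator

SENTENCE_END = (".", "?", "!")

def synthesize(text: str) -> bytes:
    """Hypothetical TTS call; returns placeholder 'audio' bytes for illustration."""
    return text.encode("utf-8")

def stream_tts(tokens: Iterable[str]) -> Iterator[bytes]:
    """Yield audio for each completed sentence while the LLM is still generating."""
    buffer = ""
    for token in tokens:
        buffer += token
        if buffer.rstrip().endswith(SENTENCE_END):
            yield synthesize(buffer.strip())
            buffer = ""
    if buffer.strip():  # flush trailing text that has no final punctuation
        yield synthesize(buffer.strip())
```

With tokens `["Sure", ", I can", " help.", " Your order", " shipped", " today"]`, the first audio chunk is produced as soon as "Sure, I can help." is complete, while the rest of the response is still being generated. Time to first audio then depends on the first segment, not on the full response length.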

This entire pipeline must operate continuously, in real time, under unpredictable network and environmental conditions.

Where Voice Agents Break—and Why

Here are some recurring failure points for voice agents:

High Perceived Latency (“Awkward Silence”): Latency can come from multiple points in the voice pipeline, not just “slow models.” It can stem from delays in detecting the end of a user’s utterance, slow transcription, slow LLM processing, or slow conversion of text to audio. Without observability into the time each layer takes, vendors can only report total response time, which makes it hard to isolate the root cause. At Level AI, we track millisecond-level “Time to First Byte” across layers, which makes latency traceable and controllable.
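Per-layer latency tracking can start as simply as timestamping when each stage of a turn first produces output. The following sketch records time to first output per stage and reports how much latency each stage added. The `TurnTimer` class and the stage names are hypothetical, not Level AI's telemetry schema. The clock is injectable so the example can be tested deterministically.

```python
import time

class TurnTimer:
    """Records, for one conversational turn, when each pipeline stage first emitted output."""

    def __init__(self, clock=time.perf_counter):
        self.clock = clock
        self.start = clock()  # e.g. the moment the caller stops speaking
        self.marks = {}       # stage name -> seconds since start

    def first_output(self, stage: str) -> None:
        # Only the first call per stage counts: time to first byte, not completion.
        if stage not in self.marks:
            self.marks[stage] = self.clock() - self.start

    def breakdown_ms(self) -> dict:
        """Incremental latency each stage added, in milliseconds, in chronological order."""
        result, previous = {}, 0.0
        for stage, t in sorted(self.marks.items(), key=lambda kv: kv[1]):
            result[stage] = round((t - previous) * 1000, 1)
            previous = t
        return result
```

A breakdown such as `{"vad_endpoint": 250.0, "stt_final": 150.0, "llm_first_token": 550.0, "tts_first_audio": 150.0}` points directly at the LLM stage. A single 1.1-second total would not.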

Premature Cut-Offs and Interruption Detection Failures: If VAD is too aggressive, users are cut off. If VAD is too slow, the bot talks over the caller. Most third-party VAD APIs hide their internal thresholds. Because Level AI owns VAD, we can observe and tune the exact speech boundary signals that drive interruption handling, enabling more reliable interruption detection. Combined with our STT layer, this also lets us personalize voice behavior for different accents, call types, and acoustic environments so the agent responds naturally.
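The trade-off between cutting users off and talking over them largely comes down to a few timing thresholds applied to per-frame speech/non-speech decisions. A minimal sketch, assuming 20 ms frames and hypothetical threshold values: the user's turn ends only after `min_silence_ms` of continuous non-speech, and a barge-in fires only after `min_speech_ms` of continuous speech while the agent is talking.

```python
FRAME_MS = 20  # assumed frame duration

def end_of_turn_frame(speech_flags, min_silence_ms=500):
    """Index of the frame at which the user's turn is considered finished, or None.

    Lower min_silence_ms: faster responses but more premature cut-offs.
    Higher min_silence_ms: fewer cut-offs but longer awkward silences.
    """
    needed = min_silence_ms // FRAME_MS
    heard_speech, silent_run = False, 0
    for i, is_speech in enumerate(speech_flags):
        if is_speech:
            heard_speech, silent_run = True, 0
        elif heard_speech:
            silent_run += 1
            if silent_run >= needed:
                return i
    return None

def is_barge_in(speech_flags_while_agent_speaks, min_speech_ms=200):
    """True once the caller has spoken continuously long enough to interrupt the agent.

    Too low, and coughs or background voices stop the agent; too high, and it talks over the caller.
    """
    needed = min_speech_ms // FRAME_MS
    run = 0
    for is_speech in speech_flags_while_agent_speaks:
        run = run + 1 if is_speech else 0
        if run >= needed:
            return True
    return False
```

Because the right values differ by accent, call type, and acoustic environment, these thresholds are exactly the parameters that are worth exposing and tuning per deployment.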

Low Response Quality: Poor responses usually originate upstream, from mistranscription, lost context, or LLM processing errors. Wrapper architectures obscure where the error entered. Level AI traces every call at the ‘turn’ level, so when a response is wrong, we can identify whether the root cause was a mistranscription, an LLM issue, or a system prompt issue.
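Turn-level tracing is useful when each turn's record carries enough signal to attribute a bad response. The sketch below is a hypothetical, rule-based triage over such a record. The `TurnTrace` fields, the word error rate (WER) threshold, and the rule order are illustrative assumptions, not Level AI's tracing schema or methodology.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class TurnTrace:
    transcript_wer: Optional[float]  # word error rate vs. a reviewed reference, if available
    context_complete: bool           # did the prompt include the facts this turn needed?
    response_followed_prompt: bool   # did the LLM output comply with the system prompt?

def attribute_root_cause(trace: TurnTrace, wer_threshold: float = 0.2) -> str:
    """Check stages upstream-first, so later stages aren't blamed for earlier errors."""
    if trace.transcript_wer is not None and trace.transcript_wer > wer_threshold:
        return "mistranscription"
    if not trace.context_complete:
        return "lost context"
    if trace.response_followed_prompt:
        # The model did what it was told, so the instructions themselves were likely wrong.
        return "system prompt issue"
    return "LLM issue"
```

The upstream-first order matters: an LLM given a garbled transcript can produce a wrong answer while behaving correctly, and only a per-turn trace can reveal that.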

The Level AI Advantage

Ownership is not about building everything from scratch. It is about controlling the parameters that matter. At Level AI, ownership is defined by the ability to observe, tune, and replace components without breaking the system.

- SIP Layer: Owning the SIP stack lets us integrate with everything from legacy SIP-based CCaaS systems to modern gRPC- and WebRTC-based CCaaS platforms, which ensures smooth onboarding onto our clients’ IVRs.

- Deployment Framework: Our modular framework allows full stack deployment in specific geographies (e.g., Frankfurt or Mumbai), reducing physical network latency and meeting data-residency requirements.

- STT and TTS: We are model-agnostic and leverage both our proprietary ASR model and multiple other best-in-class providers. This gives us the ability to select the best model for each customer based on latency, domain, vocabulary, and cost.

- VAD: Fully owned and tuned in-house to solve interruption handling—one of the hardest problems in voice.

- LLM Orchestration: Owned end to end to enforce business logic, safety guardrails, and brand-aligned behavior.
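The latency benefit of regional deployment can be estimated from physics alone. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200,000 km/s. Every 1,000 km of fiber therefore adds about 5 ms one way, or 10 ms per round trip, before any processing happens. The following calculator uses that propagation speed and ignores routing detours, queuing, and processing. Real network paths are also longer than the straight-line distance, so the result is a lower bound.

```python
FIBER_KM_PER_S = 200_000  # approximate speed of light in optical fiber (~2/3 of c)

def min_round_trip_ms(distance_km: float) -> float:
    """Physical lower bound on round-trip time over fiber for a given one-way distance."""
    one_way_s = distance_km / FIBER_KM_PER_S
    return 2 * one_way_s * 1000
```

A caller 6,000 km from the processing region pays at least `min_round_trip_ms(6000)` = 60 ms per round trip. A turn that crosses that distance several times (audio in, model calls, audio out) accumulates this floor on every crossing, and no model optimization can remove it.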

This balance, owning the critical layers while remaining flexible at the model level, is what enables superior agent performance.

“Owning the Stack”: Why Does It Matter?

Owning the voice stack means moving beyond “black-box” APIs to a model where we control the fundamental parameters of Perception (VAD), Transcription (STT), and Synthesis (TTS). It changes what is possible by turning the voice stack from an opaque pipeline into a measurable, optimizable system. Here’s what that enables:

- End-to-End Observability: When you own the stack, you can trace every call at each layer, from VAD to STT to orchestration to TTS. Level AI’s stack can isolate failures to specific milliseconds across these layers, or to specific conditions such as noise patterns, accents, and jitter.

- Enabling Fallbacks and Graceful Degradation: STT/TTS models evolve quickly, but swapping them is risky if it breaks custom logic and guardrails. A model-agnostic stack lets you switch to better models, or enable fallback routing and safe-mode behaviors for controlled degradation, so that a single component issue doesn’t collapse the entire experience.

- Edge Deployments: Processing location matters more in voice than almost any other AI domain. Physical distance introduces a latency floor that no model can overcome. Owning deployment allows us to place the entire voice stack close to callers, enabling human-like turn-taking.

- Continuous Improvement: By owning VAD and orchestration, we continuously refine interruption handling, accent robustness, and conversational flow. Improvements propagate across customers instead of being reset with each vendor update.
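Fallback routing across interchangeable models can be sketched as an ordered list of providers behind a single interface. The router tries each provider in turn and switches to a safe-mode behavior if all of them fail. The provider callables, the broad exception handling, and the `SAFE_MODE` sentinel are hypothetical placeholders. A production version would also add per-provider timeouts and health tracking (e.g. a circuit breaker).

```python
from typing import Callable, Sequence, Tuple

Provider = Tuple[str, Callable[[bytes], str]]  # (name, transcribe function)

# Hypothetical sentinel: the orchestrator might ask the caller to repeat, or hand off to a human.
SAFE_MODE = "__safe_mode__"

def transcribe_with_fallback(audio: bytes, providers: Sequence[Provider]) -> Tuple[str, str]:
    """Return (provider_name, transcript) from the first provider that succeeds."""
    for name, transcribe in providers:
        try:
            return name, transcribe(audio)
        except Exception:
            # Sketch only: real code would log the failure against the turn ID
            # so it appears in tracing, then move on to the next provider.
            continue
    return SAFE_MODE, ""
```

Because the orchestration layer depends only on the `(name, function)` interface, swapping, reordering, or adding models does not touch business logic or guardrails. That decoupling is what makes model changes safe.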

The Three Questions That Expose Wrappers

If you are evaluating a voice AI vendor, these questions separate stack owners from orchestrators:

- Can you show millisecond-level telemetry for the VAD-to-STT handoff in a single call? Good answer: detailed timestamps across layers. Bad answer: aggregate latency metrics owned by third-party APIs.

- Can we choose where voice processing—not just storage—is deployed? Good answer: full stack deployment in specific regions. Bad answer: a single global processing region behind a CDN.

- How do you integrate new models without breaking our custom logic? Good answer: pluggable models decoupled from orchestration. Bad answer: automatic vendor updates you cannot control.

The Bottom Line

High-performance voice agents are systems, not demos. Latency, reliability, safety, and quality are emergent properties of the entire stack—not any single model.

Owning the stack is the only way to see, fix, and continuously improve those properties.

Everything else is noise.

Want to know more? Sign up for a demo: https://thelevel.ai/request-demo/